BSc in Applied Mathematics, Moscow Institute of Physics and Technology

If you would like to join or visit my group at MBZUAI, please check this page.

I am a Tenure-Track Assistant Professor of Statistics and Data Science at MBZUAI. My research interests include Stochastic Optimization and its applications to Machine Learning, Distributed Optimization, Derivative-Free Optimization, and Variational Inequalities.

Previously, I was a research scientist at MBZUAI, where I had earlier worked as a postdoc, hosted by Samuel Horváth and Martin Takáč. Before joining MBZUAI, I worked as a junior researcher at MIPT and as a research consultant (remote postdoc) at Mila in the group of Gauthier Gidel. I obtained my PhD at MIPT, Phystech School of Applied Mathematics and Informatics, where I worked under the supervision of Professors Alexander Gasnikov and Peter Richtárik.

In 2019, I received the Ilya Segalovich Award (highly selective).

Action Editor at TMLR (from 2024).

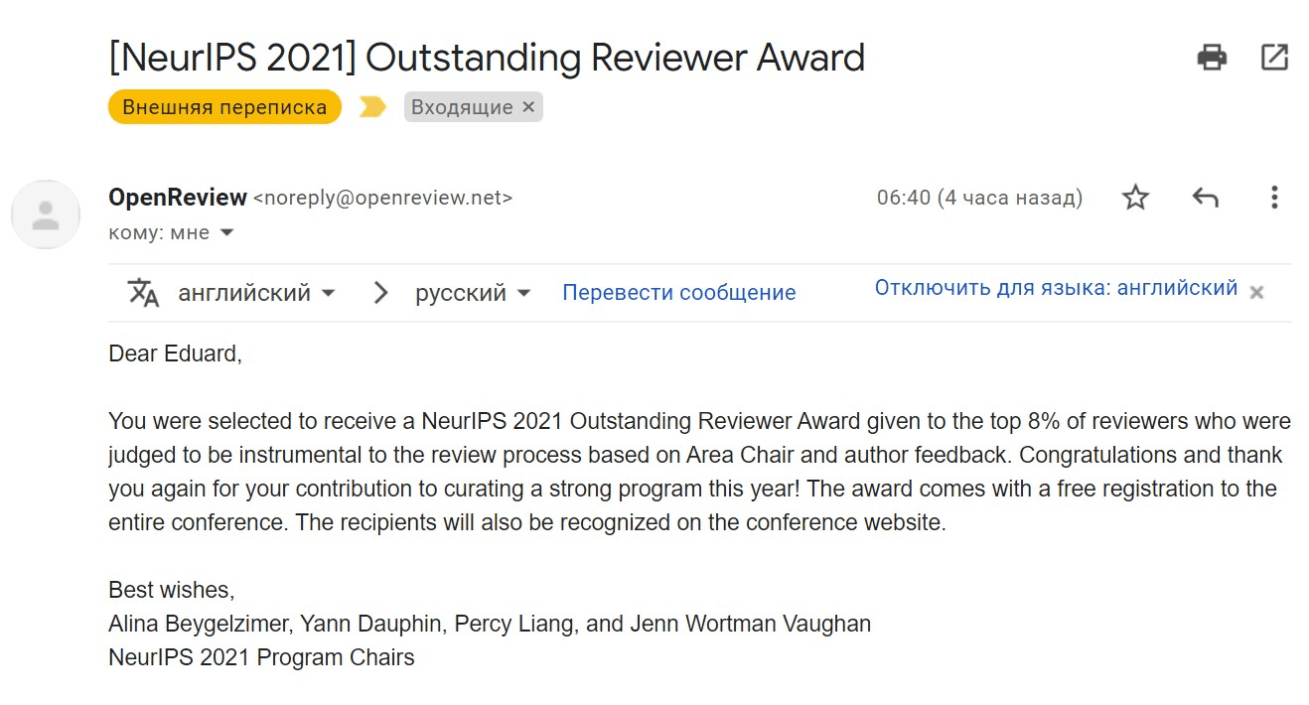

Area Chair at NeurIPS (2025).

Google Scholar

ResearchGate

arXiv

X (Twitter)

CV

E-mail: eduard.gorbunov@mbzuai.ac.ae

Sep 2014 - Jul 2018

Department of Control and Applied Mathematics

Thesis: Accelerated Directional Searches and Gradient-Free Methods with non-Euclidean prox-structure

Advisor: Alexander Gasnikov

Sep 2018 - Jul 2020

Phystech School of Applied Mathematics and Informatics

Thesis: Derivative-free and stochastic optimization methods, decentralized distributed optimization

Advisor: Alexander Gasnikov

Sep 2020 - Dec 2021

Phystech School of Applied Mathematics and Informatics

Thesis: Distributed and Stochastic Optimization Methods with Gradient Compression and Local Steps

Advisors: Alexander Gasnikov and Peter Richtárik

Links: slides and video of the defense

Aug 2017 - Oct 2019

Researcher at Peter Richtárik's group

Feb 2018 - Jun 2019

Teaching assistant for the following courses: Algorithms and Models of Computation, Probability Theory

May 2019 - Aug 2019

Intern at Media Laboratory (research, C++ programming)

Aug 2019 - Jul 2020

Researcher

Nov 2019 - Apr 2020

Junior researcher at Department of Mathematical Methods of Predictive Modelling

Feb 2020 - Dec 2020

Junior researcher at Laboratory of Numerical Methods of Applied Structural Optimization

May 2020 - Aug 2022

Junior researcher at Laboratory of Advanced Combinatorics and Network Applications

May 2020 - Dec 2021

Research Assistant at International Laboratory of Stochastic Algorithms and High-Dimensional Inference

Sep 2020 - Jan 2022

Junior researcher at the joint Lab of Yandex.Research and Moscow Institute of Physics and Technology

Feb 2022 - May 2022

Research consultant at Mila, Montreal

Group of Gauthier Gidel

Sep 2022 - Mar 2024

Postdoctoral Fellow at MBZUAI, Abu Dhabi

Groups of Samuel Horváth and Martin Takáč

Apr 2024 - Jul 2025

Research Scientist at MBZUAI, Abu Dhabi

Groups of Samuel Horváth and Martin Takáč

Aug 2025 - Present

Assistant Professor of Statistics and Data Science

(Tenure-Track)
